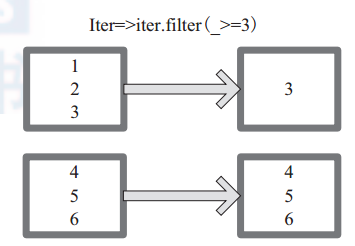

When I first started playing with MapReduce, I was immediately disappointed with how complicated everything was. When I started using Spark, I was enamored. Everything was so simple! With Python (PySpark) on my side, I could start writing programs by combining simple functions. They're nothing like the complicated Java programs needed for MapReduce.

I’d like to share some basic PySpark expressions and idiosyncrasies that will help you explore and work with your data. For this post, I’ll be focusing on manipulating Resilient Distributed Datasets (RDDs) and will discuss SQL / DataFrames at a later date. (If you would rather start there, learn the basics of PySpark SQL joins as your first foray.)

`mydata = sc.textFile('file/path/or/file.something')`

In this line of code, you’re creating the “mydata” variable (technically an RDD) and you’re pointing to a file (either on your local PC, HDFS, or other data source). Notice how I used the word “pointing”? Spark is lazy. Spark’s lazy nature means that it doesn’t run your code right away. Instead, it waits until some sort of action occurs that requires a calculation.

The main way you manipulate data is using the map() function. The map (and mapValues) is one of the main workhorses of Spark. Imagine you had a file that was tab delimited and you wanted to rearrange your data to be column1, column3, column2. I’m working with the MovieLens 100K dataset for those who want to follow along.

First we have to load the data into Spark. Next we have to map a couple of functions to our data: one map splits the data wherever it finds a tab (\t), and a second takes the results of the split and rearranges them (Python starts its lists / columns with zero instead of one).

You’ll notice the “lambda x:” inside of the map function. That’s called an anonymous function (or a lambda function). The “x” part is really every row of your data. You use “x” after the colon like any other Python object, which is why we can split it into a list and later rearrange it.
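Here is a rough sketch of the load, split, and rearrange steps described in this post. It is not necessarily the exact code I ran. The function name `rearrange_columns` and its `path` argument are placeholders made up for illustration, `sc` is assumed to be an existing SparkContext, and the sample rows are just illustrative tab-delimited strings. The two lambdas are also run through Python's built-in `map` so you can see what they do without a cluster.

```python
# The two steps as lambdas, in the same form you would pass to rdd.map().
split_on_tab = lambda x: x.split("\t")      # one row (a string) -> list of columns
reorder = lambda x: (x[0], x[2], x[1])      # column1, column3, column2 (zero-indexed)


def rearrange_columns(sc, path):
    """Hypothetical helper: sc is an existing SparkContext, path is a placeholder.

    textFile() and map() are lazy, so this only describes the work.
    Nothing is read or computed until an action such as take() is called.
    """
    return sc.textFile(path).map(split_on_tab).map(reorder)


# Local check of the same lambdas using Python's built-in map (no Spark needed).
# These rows are illustrative tab-delimited strings, not read from a file.
rows = ["196\t242\t3\t881250949", "186\t302\t3\t891717742"]
local_result = list(map(reorder, map(split_on_tab, rows)))
print(local_result)
```

With a live SparkContext, something like `rearrange_columns(sc, 'file/path/or/file.something').take(5)` would be the action that finally makes Spark read the file and apply both maps.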

Summary: Spark (and PySpark) use map, mapValues, reduce, reduceByKey, aggregateByKey, and join to transform, aggregate, and connect datasets. Each function can be strung together to do more complex tasks.

Update: PySpark RDDs are still useful, but the world is moving toward DataFrames.

This entry was posted in Python Spark by Will.
